Research

- Projects:

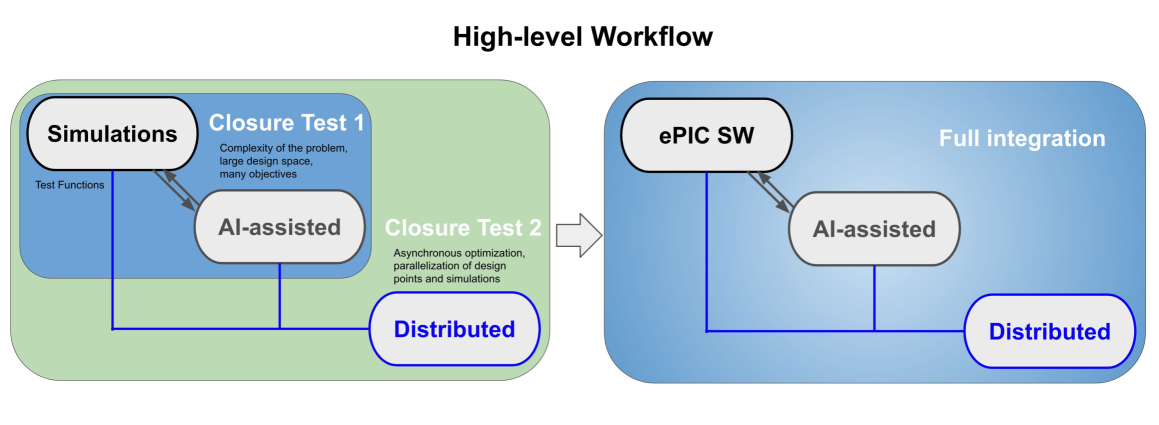

- AI-assisted Detector Design for EIC (AID²E): (ongoing DOE-funded grant) Artificial intelligence is poised to transform the design of complex, large-scale detectors such as ePIC at the future Electron-Ion Collider. The ePIC experiment has a central detector plus additional detection systems in the far-forward and far-backward regions. Its design involves numerous parameters and objectives, including performance, physics reach, and cost, all constrained by mechanical and geometric limits. This project aims to develop a scalable, distributed AI-assisted detector design for the EIC (AID²E) that uses state-of-the-art multi-objective optimization to handle such complex designs. Built on the ePIC software stack and Geant4 simulations, our approach benefits from transparent parameterization and advanced AI features. The workflow uses the PanDA and iDDS systems to manage the compute-intensive demands of ePIC detector simulations. These systems are also used by major experiments and facilities such as ATLAS at the CERN LHC, the Rubin Observatory, and sPHENIX at RHIC. Tailored enhancements to PanDA focus on usability, scalability, automation, and monitoring. Ultimately, the project aims to establish a robust design capability and to apply the distributed AI-assisted workflow to the ePIC detector. It will then extend this workflow to the design of the EIC's second detector (Detector-2) and to calibration and alignment tasks. We are also developing data science tools to navigate efficiently the complex, multidimensional trade-offs identified by this optimization [https://arxiv.org/abs/2405.16279].
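The multi-objective trade-offs described above are usually summarized by a Pareto front: the set of designs that no other design beats in every objective at once. Below is a minimal Python sketch of Pareto dominance and a brute-force front extraction. It assumes every objective is to be minimized. The objective names and numbers are hypothetical. It only illustrates the idea of non-dominated solutions and is not the optimization workflow used in this project.

```python
from typing import List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """Return True if design `a` Pareto-dominates design `b`.

    All objectives are minimized: `a` must be no worse than `b` in every
    objective and strictly better in at least one.
    """
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(designs: List[Sequence[float]]) -> List[int]:
    """Return the indices of the non-dominated designs (simple O(n^2) scan)."""
    return [
        i
        for i, d in enumerate(designs)
        if not any(dominates(other, d) for j, other in enumerate(designs) if j != i)
    ]


# Hypothetical designs scored on two objectives to minimize:
# (momentum resolution in %, relative cost). Values are illustrative only.
designs = [(1.0, 5.0), (2.0, 3.0), (3.0, 1.0), (2.5, 3.5), (1.5, 6.0)]
print(pareto_front(designs))  # [0, 1, 2]
```

Here (2.5, 3.5) is dropped because (2.0, 3.0) is better in both objectives, and (1.5, 6.0) is dropped because of (1.0, 5.0). The remaining three designs are genuine trade-offs, which is what a Pareto-front visualization lets a designer compare.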

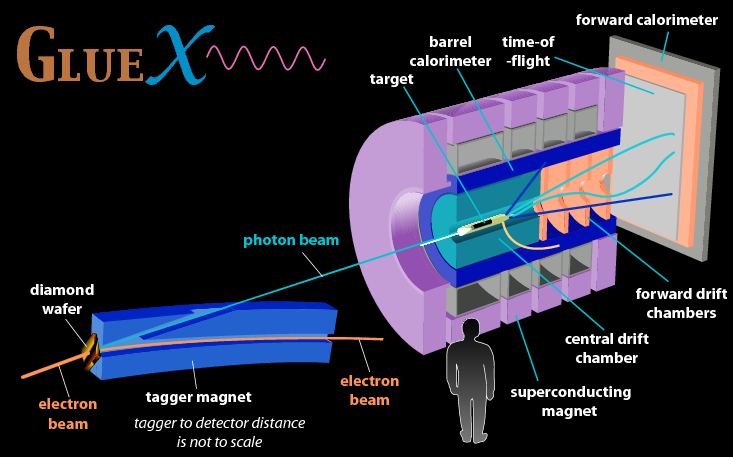

- AI-Optimized Polarization (AIOP): (ongoing DOE-funded grant) This project aims to increase the average polarization of polarized cryo-targets and polarized photon beams at Jefferson Lab through continuous AI/ML control. Improved polarization boosts the statistics obtained for a given beam time. Continuous control also stabilizes polarization during experiments, moving toward self-driven experiments and cost savings in accelerator operations. The polarized cryo-targets in Halls A, B, and C use ammonia at very low temperature (around 1 K) in strong magnetic fields (~5 T) with microwaves (~140 GHz). Optimal polarization depends on multiple variables, including temperature, microwave power, and the distribution of paramagnetic radicals, all of which change over time. Traditionally, experimenters adjust the microwave frequency by hand based on feedback from an NMR signal, which carries some uncertainty due to background fluctuations. This project will use AI/ML to optimize the microwave frequency continuously and to extract cleaner NMR signals for better feedback. In Hall D, a thin diamond radiator produces linearly polarized photons via coherent bremsstrahlung. The position of the coherent enhancement in the photon energy spectrum is set by rotating the diamond. Currently, this enhancement peak drifts because of changes in beam conditions and diamond degradation, and experimenters must correct it manually. The project will use AI/ML to adjust the diamond angles continuously to maintain high polarization, and to monitor beam conditions and detector performance. AI/ML will also map radiation damage to the diamond and recommend when to move to different diamond sections to mitigate degradation.

[https://wiki.jlab.org/epsciwiki/index.php/AI_Optimized_Polarization]
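To make continuous, automated tuning concrete, here is a toy Python sketch of a feedback loop. It nudges a microwave frequency toward the value that maximizes a noisy polarization signal, using finite-difference gradient ascent with averaged readings. Everything in it is an illustrative assumption. The Gaussian response `toy_polarization`, its 0.2 GHz width, the noise level, and the step parameters are all hypothetical. This is not the AIOP control algorithm, which the project describes only as AI/ML-based.

```python
import math
import random


def toy_polarization(freq_ghz: float, optimum_ghz: float = 140.0, width_ghz: float = 0.2) -> float:
    """Hypothetical stand-in for the polarization response: a Gaussian peak
    centred on an optimal microwave frequency unknown to the controller."""
    return math.exp(-0.5 * ((freq_ghz - optimum_ghz) / width_ghz) ** 2)


def make_noisy_probe(rng: random.Random, noise: float = 0.01):
    """Return a measurement function that adds Gaussian noise, mimicking a
    fluctuating NMR-based polarization readout (toy model)."""
    def probe(freq_ghz: float) -> float:
        return toy_polarization(freq_ghz) + rng.gauss(0.0, noise)
    return probe


def feedback_step(freq_ghz, probe, delta=0.01, gain=0.02, n_avg=8):
    """One finite-difference gradient-ascent step: probe freq +/- delta,
    average repeated readings to suppress noise, and move uphill."""
    up = sum(probe(freq_ghz + delta) for _ in range(n_avg)) / n_avg
    down = sum(probe(freq_ghz - delta) for _ in range(n_avg)) / n_avg
    gradient = (up - down) / (2.0 * delta)
    return freq_ghz + gain * gradient


rng = random.Random(0)       # fixed seed so the toy run is reproducible
probe = make_noisy_probe(rng)
freq = 140.3                 # start 300 MHz away from the toy optimum
for _ in range(60):
    freq = feedback_step(freq, probe)
print(f"{freq:.3f} GHz")
```

Averaging several probes per step trades measurement time for a less noisy gradient estimate. Cleaner NMR signals, one of the AIOP goals, would reduce how much of that averaging a controller needs.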

- AI for Fusion Summer School: (ongoing DOE-funded grant) An intensive two-week summer school will be offered at William & Mary. It is aimed at undergraduate students with backgrounds in physics, engineering, computer science, applied mathematics, and data science. The course will divide its time roughly equally between traditional instruction and hands-on projects. The instruction will consist of daily 80-minute morning classes focused on computing, applied mathematics, machine learning, and fusion energy. These classes will build on existing W&M data science courses such as databases, applied machine learning, deep learning, and Bayesian reasoning in data science. They will be supplemented by a class on fusion energy that prepares students for the applications they will tackle in the hands-on component and in their summer research. [https://ai4fusion-wmschool.github.io/summer2024/intro.html]

- AI4EIC: The Electron-Ion Collider (EIC), a state-of-the-art facility for studying the strong force, is expected to begin commissioning its first experiments in 2028. This is an opportune time to include artificial intelligence from the start, both at the facility and in all phases leading up to the experiments. The AI4EIC working group organizes annual workshops that explore all current and prospective applications of AI for the EIC. Solutions discussed at AI4EIC provide valuable insights for the newly established ePIC collaboration and for Detector-2. AI4EIC also organizes outreach events such as hackathons, tutorials, and schools [paper: https://link.springer.com/article/10.1007/s41781-024-00113-4; website: https://eic.ai/; hackathons: LLM for PID https://arxiv.org/abs/2404.05752, and AI/ML for dRICH in EIC]. Other AI4EIC-related activities include a suite of tools for visualizing detector design trade-off solutions on a Pareto front [https://ai4eicdetopt.pythonanywhere.com/]. They also include a RAG-based assistant for EIC science [https://arxiv.org/abs/2403.15729 and https://rags4eic-ai4eic.streamlit.app/].

- ELUQuant: Event-Level Uncertainty Quantification: We introduce a physics-informed Bayesian neural network with flow-approximated posteriors, based on multiplicative normalizing flows, for detailed uncertainty quantification (UQ) at the level of individual physics events. Our method identifies both heteroskedastic aleatoric and epistemic uncertainties, providing granular physical insight. Applied to deep inelastic scattering (DIS) events, our model extracts the kinematic variables x, Q², and y. It matches the performance of recent deep learning regression techniques while adding the critical enhancement of event-level UQ. This detailed description of the underlying uncertainty is invaluable for decision-making, especially in tasks like event filtering. It also makes it possible to reduce true inaccuracies without direct access to the ground truth. A detailed DIS simulation of the H1 detector at HERA points to possible applications at the future Electron-Ion Collider. This work also paves the way for related tasks such as data quality monitoring and anomaly detection. Notably, our approach processes large samples at high rates. [https://iopscience.iop.org/article/10.1088/2632-2153/ad2098, Mach. Learn.: Sci. Technol. 5 (2024) 015017]

- Flux+Mutability – Anomaly Detection: Anomaly detection is becoming increasingly popular in the experimental physics community. At experiments such as the Large Hadron Collider, it is of growing interest for finding new physics beyond the Standard Model. This paper details the implementation of a novel machine learning architecture, called Flux+Mutability, which combines cutting-edge conditional generative models with clustering algorithms. In the ‘flux’ stage we learn the distribution of a reference class. In the ‘mutability’ stage, at inference, we assess whether data deviate significantly from that reference class. We demonstrate the validity of our approach and its connection to multiple problems, from one-class classification to anomaly detection. In particular, we apply our method to the isolation of neutral showers in an electromagnetic calorimeter. We also show its performance in detecting anomalous dijet events against the standard QCD background. The approach limits assumptions on the reference sample and remains agnostic to the complementary class of objects in a given problem. We describe the possibility of dynamically generating a reference population and defining selection criteria via quantile cuts. This flexible architecture can be deployed for a wide range of problems. Applications such as multi-class classification and data quality control are left for future exploration. [https://iopscience.iop.org/article/10.1088/2632-2153/ac9bcb/meta, Mach. Learn.: Sci. Technol. 3 (2022) 045012]

- Streaming Readout: Current and future experiments at the high-intensity frontier are expected to produce enormous amounts of data that must be collected and stored for offline analysis. Thanks to continuous progress in computing and networking technology, it is now possible to replace standard ‘triggered’ data acquisition (DAQ) systems with a new, simpler, and higher-performing scheme. ‘Streaming readout’ (SRO) DAQ aims to replace the hardware-based trigger with a much more powerful and flexible software-based one. This software trigger uses information from the whole detector for efficient real-time data tagging and selection. Given the crucial role of the DAQ in an experiment, on-beam tests are required to validate SRO performance. In this paper, we report results of the on-beam validation of the Jefferson Lab SRO framework. We exposed different detectors (PbWO₄-based electromagnetic calorimeters and a plastic scintillator hodoscope) to the Hall D electron-positron secondary beam and to the Hall B production electron beam, under increasingly complex experimental conditions. By comparing the data collected with the SRO system against the traditional DAQ, we demonstrate that the SRO performs as expected. Furthermore, we provide evidence of its superiority for implementing sophisticated AI-supported algorithms for real-time data analysis and reconstruction [https://doi.org/10.1140/epjp/s13360-022-03146-z].
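For the ELUQuant entry above, a common way to separate the two kinds of uncertainty in a Bayesian neural network is the law of total variance. Suppose each posterior weight sample w predicts a mean μ_w and a variance σ²_w for an event. The predictive variance then splits into an aleatoric part, the average of σ²_w over samples, and an epistemic part, the variance of μ_w across samples. The Python sketch below assumes the T posterior samples have already been drawn; in ELUQuant they would come from the flow-approximated posterior. The function name and toy numbers are hypothetical, and this is not the ELUQuant code.

```python
import numpy as np


def decompose_uncertainty(sample_means: np.ndarray, sample_vars: np.ndarray):
    """Split per-event predictive uncertainty via the law of total variance.

    sample_means, sample_vars: arrays of shape (T, N) holding the predicted mean
    and predicted (aleatoric) variance from T posterior weight samples for N events.
    Returns the per-event predictive mean, aleatoric variance and epistemic variance.
    """
    mean = sample_means.mean(axis=0)        # E_w[mu]
    aleatoric = sample_vars.mean(axis=0)    # E_w[sigma^2]: noise inherent in the data
    epistemic = sample_means.var(axis=0)    # Var_w[mu]: spread across weight samples
    return mean, aleatoric, epistemic


# Two posterior samples (T=2) for two events (N=2); numbers are illustrative only.
means = np.array([[1.0, 2.0], [3.0, 2.0]])
variances = np.array([[0.5, 0.1], [0.5, 0.3]])
mean, alea, epi = decompose_uncertainty(means, variances)
# mean = [2, 2], aleatoric = [0.5, 0.2], epistemic = [1, 0]
print(mean, alea, epi)
```

The total predictive variance per event is `alea + epi`. The second toy event has zero epistemic variance because both weight samples agree on its mean, while the first event's samples disagree strongly.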
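For the Flux+Mutability entry above, selection via quantile cuts can be sketched as follows. The decision threshold is placed at a chosen quantile of scores computed on a reference population, and new samples that exceed it are flagged. In this minimal Python sketch, the reference scores are simply drawn from a standard normal as a stand-in for a generated reference population. The score itself, the function names, and the 0.99 quantile are hypothetical choices, not the paper's implementation.

```python
import numpy as np


def quantile_cut(reference_scores, quantile: float = 0.99) -> float:
    """Place the selection threshold at a given quantile of the reference scores."""
    return float(np.quantile(np.asarray(reference_scores, dtype=float), quantile))


def is_anomalous(scores, threshold: float) -> np.ndarray:
    """Flag samples whose score exceeds the reference-derived threshold."""
    return np.asarray(scores, dtype=float) > threshold


# Hypothetical anomaly scores for a dynamically generated reference population.
rng = np.random.default_rng(42)
reference = rng.normal(loc=0.0, scale=1.0, size=10_000)
threshold = quantile_cut(reference, quantile=0.99)
flags = is_anomalous([0.1, 1.0, 5.0], threshold)
print(round(threshold, 2), flags)
```

By construction, about 1% of the reference population falls above a 0.99-quantile cut. The chosen quantile therefore directly sets the false-positive rate on reference-like data, without any assumption about what the anomalous class looks like.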

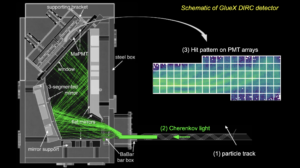

- Machine Learning for Particle Identification with Cherenkov detectors:

- Fanelli, Cristiano, and Jary Pomponi. “DeepRICH: learning deeply Cherenkov detectors.” Machine Learning: Science and Technology 1.1 (2020): 015010. [https://iopscience.iop.org/article/10.1088/2632-2153/ab845a/]

- Fanelli, Cristiano, James Giroux, and Justin Stevens. “Deep(er) Reconstruction of Imaging Cherenkov Detectors with Swin Transformers and Normalizing Flow Models.”

Links, Repositories, Resources:

- W&M DataPhys: https://github.com/wmdataphys

- RAG-based EIC agent: https://github.com/wmdataphys/EIC-RAG-Project

- AI4EICHackathon2023 (Streamlit): https://github.com/wmdataphys/AI4EICHackathon2023-Streamlit

- ELUQuant: https://github.com/wmdataphys/ELUQuant

- GYM4DetectorDesign: https://github.com/wmdataphys/GYM4DetectorDesign

- Deep(er)RICH: https://github.com/wmdataphys/DeeperRICH

- Artificial Intelligence for the Electron-Ion Collider (AI4EIC): https://eic.ai, https://github.com/ai4eic

- Jefferson Lab: https://www.jlab.org

- EIC User Group: https://eicug.github.io/

- Electron-Ion Collider (EIC) Software: https://github.com/eic